As an extension of my series of posts on handling IoT security camera images with a Serverless architecture, I’ve added integration with AWS Rekognition.

Amazon Rekognition is a service that makes it easy to add image analysis to your applications. With Rekognition, you can detect objects, scenes, and faces in images. You can also search and compare faces. Rekognition’s API enables you to quickly add sophisticated deep learning-based visual search and image classification to your applications.

My goal is to identify images that have a person in them, to limit the number of images someone has to browse when reviewing the security camera alarms. (Security cameras detect motion, so you often get images of nothing more than wind moving bushes, or headlights on a wall.)

To accomplish this, we need to update one of the Lambda functions to call Rekognition when the image arrives. The updated function can be found here.

The important bits are here:

```python
import boto3
from decimal import Decimal


def get_rekognition_labels(object_key, object_date, timestamp):
    """
    Gets the Rekognition labels for an image stored in S3 and saves them.

    :param object_key: S3 key of the image in the security-alarms bucket
    :param object_date: capture date of the image
    :param timestamp: event timestamp (seconds since epoch)
    :return: None
    """
    bucket = 'security-alarms'
    client = boto3.client('rekognition')
    request = {
        'Bucket': bucket,
        'Name': object_key
    }
    # Ask Rekognition for at most 10 labels for the image stored in S3
    response = client.detect_labels(Image={'S3Object': request}, MaxLabels=10)
    write_labels_to_dynamo(object_key, object_date, response, timestamp)


def write_labels_to_dynamo(object_key, object_date, labels, timestamp):
    dyndb = boto3.resource('dynamodb')
    img_labels_table = dyndb.Table('security_alarm_image_label_set')
    # One item per label; DynamoDB needs Decimal rather than float
    for label_item in labels['Labels']:
        save_data = {
            'object_key': object_key,
            'label': label_item['Name'],
            'confidence': Decimal(str(label_item['Confidence'])),
            'event_ts': int(timestamp),
            'capture_date': object_date
        }
        img_labels_table.put_item(Item=save_data)
```

As you can see, invoking the Rekognition API takes 2-3 lines of code. You simply tell it where the image lives in S3 and how many labels (identified objects, scenes, items, etc.) you’d like back.

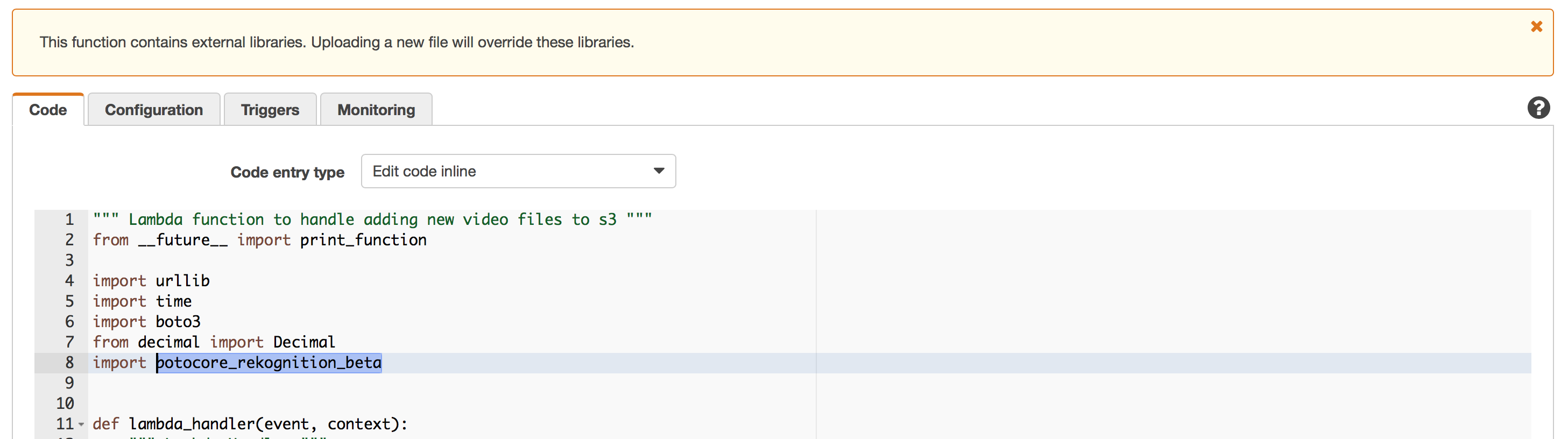

One quick note: Rekognition currently requires a special Python package, botocore_rekognition_beta, and you’ll notice this big old warning on your Lambda function:

Suffice it to say, if you are uploading your Python code, you’ll have issues here.

We then have a simple function to iterate over the labels and write them to Dynamo.
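The boto3 DynamoDB resource rejects Python `float` values, which is why the function above wraps each confidence in `Decimal(str(...))`. Below is a minimal sketch of the same transformation written as a pure function, so it can be tested without touching AWS. The name `labels_to_items` is hypothetical and is not part of the author’s code.

```python
from decimal import Decimal


def labels_to_items(object_key, object_date, response, timestamp):
    """Turn a detect_labels response into DynamoDB-ready items.

    The confidence goes through str() first so the Decimal keeps the
    printed value rather than the float's full binary expansion.
    """
    items = []
    for label in response.get('Labels', []):
        items.append({
            'object_key': object_key,
            'label': label['Name'],
            'confidence': Decimal(str(label['Confidence'])),
            'event_ts': int(timestamp),
            'capture_date': object_date,
        })
    return items
```

Each returned item can be passed to `put_item` as before. Writing them inside `with table.batch_writer() as batch:` is an option that reduces the number of round trips when an image has many labels.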

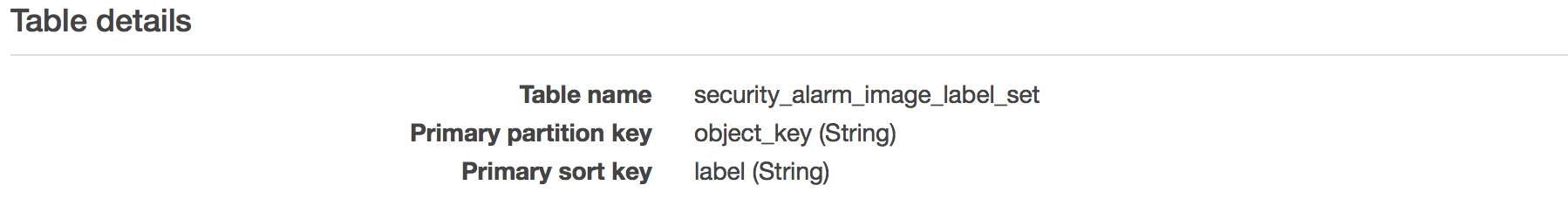

The Dynamo table is configured as follows:

This configuration allows us to get the labels via the API and display them alongside the image like so:

We will come back to the images and the labels later…

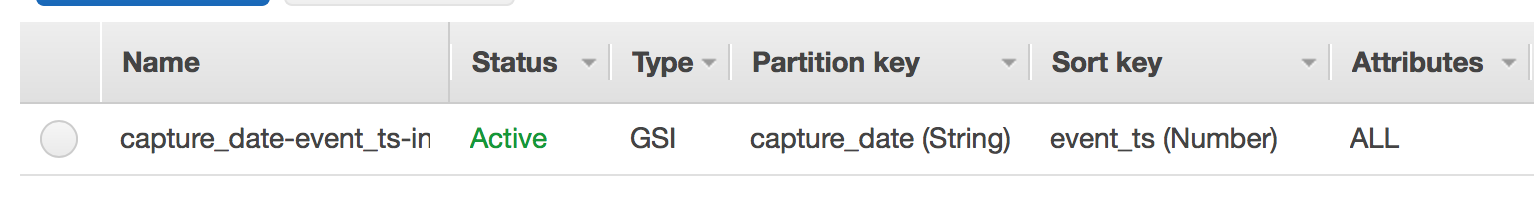

I’ve also added a Global Secondary Index to allow for periodic updates of the analytics graph:

This index allows us to do periodic updates of the graph by querying the table and reading forward from the last processed row using a checkpoint value.

The script that loads the table into the graph can be found here.

This script performs a full load of the table if no checkpoint is stored in S3. If the checkpoint file is found in S3, it processes from that checkpoint forward using the GSI above.
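The checkpoint logic described above can be sketched in plain Python. This is an illustrative sketch, not the author’s script. It assumes the following:

- Each row is a dict carrying an `event_ts` field, as written by the Lambda function.
- A checkpoint of `None` stands in for "no checkpoint file in S3".
- The GSI query and the S3 reads and writes of the checkpoint are left out.

```python
def select_rows_to_process(rows, checkpoint=None):
    """Return (rows_to_process, new_checkpoint).

    checkpoint=None means no checkpoint was found, so every row is
    processed (a full load). Otherwise only rows strictly newer than the
    checkpoint are processed. The new checkpoint is the largest event_ts
    processed, or the old checkpoint if nothing new arrived.
    """
    if checkpoint is None:
        pending = list(rows)
    else:
        pending = [row for row in rows if row['event_ts'] > checkpoint]
    # Process oldest rows first
    pending.sort(key=lambda row: row['event_ts'])
    new_checkpoint = max((row['event_ts'] for row in pending), default=checkpoint)
    return pending, new_checkpoint
```

Every label row for a given image shares one `event_ts`. A strict `>` could therefore skip labels that land after a run has already seen part of that timestamp. Because the graph load uses `MERGE`, re-reading from `>=` the checkpoint is a safe alternative.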

I won’t go into all the detail of how that is implemented (I assume you can read the Python – or at least the logging messages in the code). If you’d like to know more about how this is implemented by all means contact me.

It is worth considering, however, how the graph is created:

```python
def process_row_to_graph(object_key, label_name, confidence, event_ts=0):
    # `driver` is a module-level Neo4j driver created elsewhere in the script;
    # parse_camera_name_from_object_key and parse_date_time_from_object_key
    # are helpers from the same script.
    camera_name = parse_camera_name_from_object_key(object_key)

    # Images from the garage and crawlspace cameras are not loaded
    if camera_name != 'garage' and camera_name != 'crawlspace':
        date_info = parse_date_time_from_object_key(object_key)

        # Nodes: MERGE matches an existing node or creates it,
        # so reloading a row does not duplicate nodes
        add_camera_node = 'MERGE (this_camera:Camera {camera_name: "' + camera_name + '"})'
        add_image_node = 'MERGE (this_image:Image {object_key: "' + object_key + \
                         '", isodate: "' + date_info['isodate'] + \
                         '", timestamp: ' + str(event_ts) + '})'
        add_label_node = 'MERGE (this_label:Label {label_name: "' + label_name + '"})'
        add_isodate_node = 'MERGE (this_isodate:ISODate {iso_date: "' + date_info['isodate'] + '"})'
        add_year_node = 'MERGE (this_year:Year {year_value: ' + date_info['year'] + '})'
        add_month_node = 'MERGE (this_month:Month {month_value: ' + date_info['month'] + '})'
        add_day_node = 'MERGE (this_day:Day {day_value: ' + date_info['day'] + '})'
        add_hour_node = 'MERGE (this_hour:Hour {hour_value: ' + date_info['hour'] + '})'

        # Edges: the label edge carries Rekognition's confidence
        relate_image_to_label = 'MERGE (this_image)-[:HAS_LABEL {confidence: ' + str(confidence) + '}]->(this_label)'
        relate_image_to_camera = 'MERGE (this_camera)-[:HAS_IMAGE {timestamp: ' + str(event_ts) + '}]->(this_image)'
        relate_image_to_timestamp = 'MERGE (this_image)-[:HAS_TIMESTAMP]->(this_isodate)'
        relate_image_to_year = 'MERGE (this_image)-[:HAS_YEAR]->(this_year)'
        relate_image_to_month = 'MERGE (this_image)-[:HAS_MONTH]->(this_month)'
        relate_image_to_day = 'MERGE (this_image)-[:HAS_DAY]->(this_day)'
        relate_image_to_hour = 'MERGE (this_image)-[:HAS_HOUR]->(this_hour)'

        # Cypher only needs whitespace between clauses, so join with newlines
        full_query_list = "\n".join([
            add_camera_node, add_image_node, add_label_node, add_isodate_node,
            add_year_node, add_month_node, add_day_node, add_hour_node,
            relate_image_to_label, relate_image_to_camera,
            relate_image_to_timestamp, relate_image_to_year,
            relate_image_to_month, relate_image_to_day, relate_image_to_hour,
        ])

        neo_session = driver.session()
        tx = neo_session.begin_transaction()
        tx.run(full_query_list)
        tx.commit()
        neo_session.close()

    return True
```
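Building Cypher by string concatenation breaks as soon as an object key or label name contains a double quote. It also prevents Neo4j from reusing a cached query plan. Below is a sketch of the same `MERGE` statements using query parameters instead. A few assumptions apply:

- `build_graph_query` is a hypothetical helper.
- It uses the `$param` syntax of Neo4j 3.x and later.
- `date_info` holds numeric strings for year, month, day and hour. The original splices these into the query unquoted, so they must already be numbers.

```python
def build_graph_query(camera_name, object_key, label_name, confidence,
                      event_ts, date_info):
    """Return (cypher, params) suitable for tx.run(cypher, params)."""
    cypher = """
    MERGE (this_camera:Camera {camera_name: $camera_name})
    MERGE (this_image:Image {object_key: $object_key, isodate: $isodate,
                             timestamp: $event_ts})
    MERGE (this_label:Label {label_name: $label_name})
    MERGE (this_isodate:ISODate {iso_date: $isodate})
    MERGE (this_year:Year {year_value: $year})
    MERGE (this_month:Month {month_value: $month})
    MERGE (this_day:Day {day_value: $day})
    MERGE (this_hour:Hour {hour_value: $hour})
    MERGE (this_image)-[:HAS_LABEL {confidence: $confidence}]->(this_label)
    MERGE (this_camera)-[:HAS_IMAGE {timestamp: $event_ts}]->(this_image)
    MERGE (this_image)-[:HAS_TIMESTAMP]->(this_isodate)
    MERGE (this_image)-[:HAS_YEAR]->(this_year)
    MERGE (this_image)-[:HAS_MONTH]->(this_month)
    MERGE (this_image)-[:HAS_DAY]->(this_day)
    MERGE (this_image)-[:HAS_HOUR]->(this_hour)
    """
    params = {
        'camera_name': camera_name,
        'object_key': object_key,
        'label_name': label_name,
        # DynamoDB returns numbers as Decimal; the Neo4j driver does not
        # accept Decimal parameters, so convert to float
        'confidence': float(confidence),
        'event_ts': int(event_ts),
        'isodate': date_info['isodate'],
        'year': int(date_info['year']),
        'month': int(date_info['month']),
        'day': int(date_info['day']),
        'hour': int(date_info['hour']),
    }
    return cypher, params
```

Inside `process_row_to_graph`, the concatenated string would be replaced by `cypher, params = build_graph_query(...)` followed by `tx.run(cypher, params)`. The query text is then identical for every row, so only the parameter values change.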

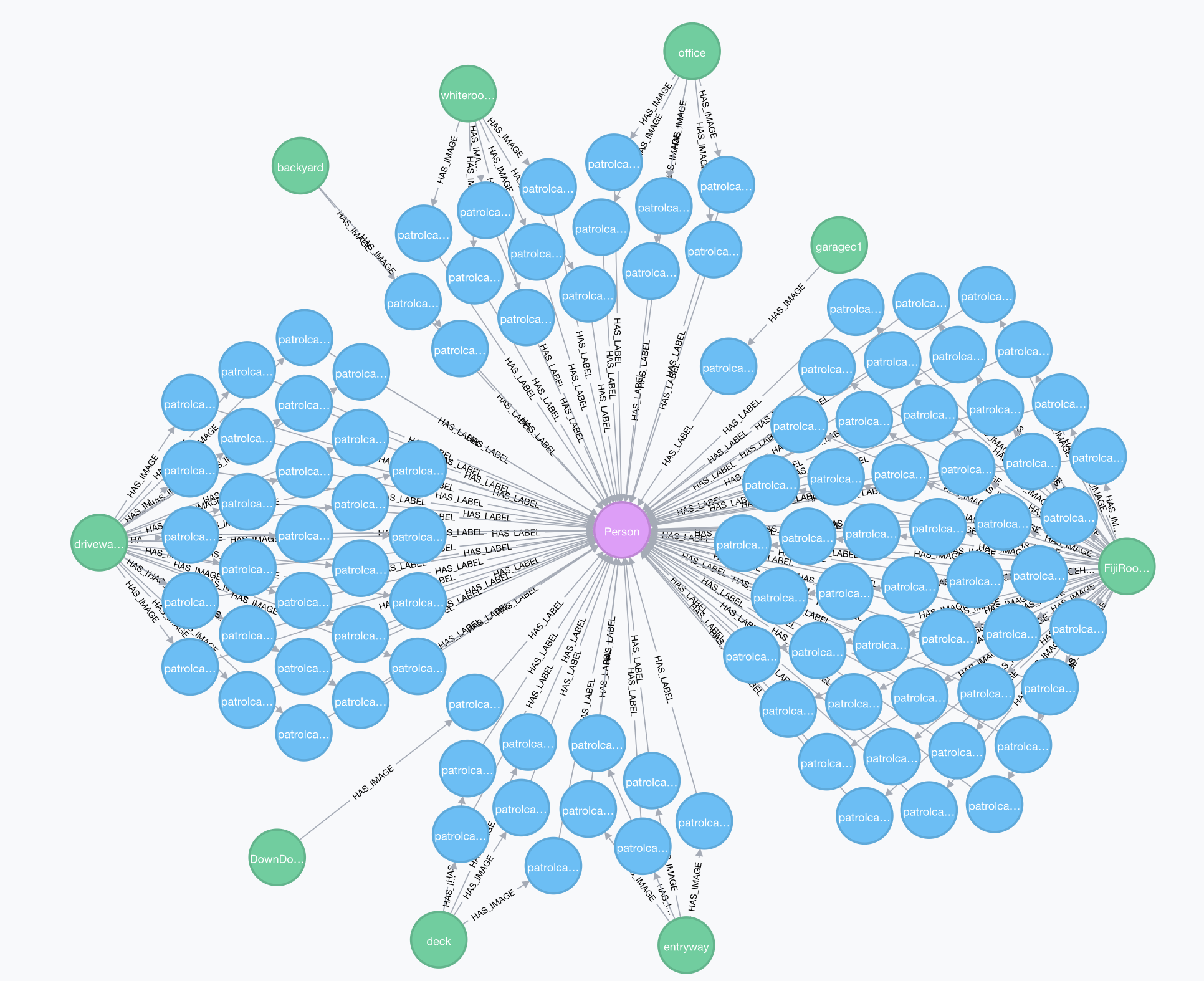

This code creates nodes for:

- Cameras

- Images

- Labels

- The ISO Date of the Image

- Year of the Image

- Month of the Image

- Day of the Image

- Hour of the Image

The image nodes have a timestamp property with a UNIX timestamp (seconds since epoch) which will allow us to identify images in a specific date/time range.
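Because the timestamp is plain UNIX seconds, a date/time range query only needs two integer bounds. Below is a minimal sketch of computing them in Python. The helper name and the choice of UTC are assumptions. If the cameras record local time, build the bounds in that time zone instead.

```python
from datetime import datetime, timezone


def epoch_bounds(start, end):
    """Convert two timezone-aware datetimes to integer UNIX seconds."""
    if start.tzinfo is None or end.tzinfo is None:
        raise ValueError("use timezone-aware datetimes to avoid local-time surprises")
    return int(start.timestamp()), int(end.timestamp())


# Images from November 2016 (UTC). The bounds can be passed as Cypher
# parameters, e.g.
#   MATCH (i:Image) WHERE i.timestamp >= $lo AND i.timestamp < $hi RETURN i
lo, hi = epoch_bounds(datetime(2016, 11, 1, tzinfo=timezone.utc),
                      datetime(2016, 12, 1, tzinfo=timezone.utc))
```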

The edges between the image nodes and the label nodes have a property with the label confidence value – allowing us to filter or segment our analysis by the confidence Rekognition had in the label.

The year, month, day and hour nodes allow us to compare roll-ups within them (compare November 2016 to December 2017, or compare ALL Novembers to ALL Decembers).
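The two kinds of comparison that the Year and Month nodes support can be illustrated in plain Python with `collections.Counter`. In the graph, the same grouping comes from following the `HAS_YEAR` and `HAS_MONTH` edges. The input here is a hypothetical list of `(year, month)` pairs, one per image.

```python
from collections import Counter


def rollups(year_months):
    """Count images per (year, month), and per month across all years."""
    by_year_month = Counter(year_months)
    by_month = Counter(month for _, month in year_months)
    return by_year_month, by_month
```

Comparing `by_year_month[(2016, 11)]` with `by_year_month[(2017, 12)]` compares two specific months. Comparing `by_month[11]` with `by_month[12]` compares all Novembers with all Decembers.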

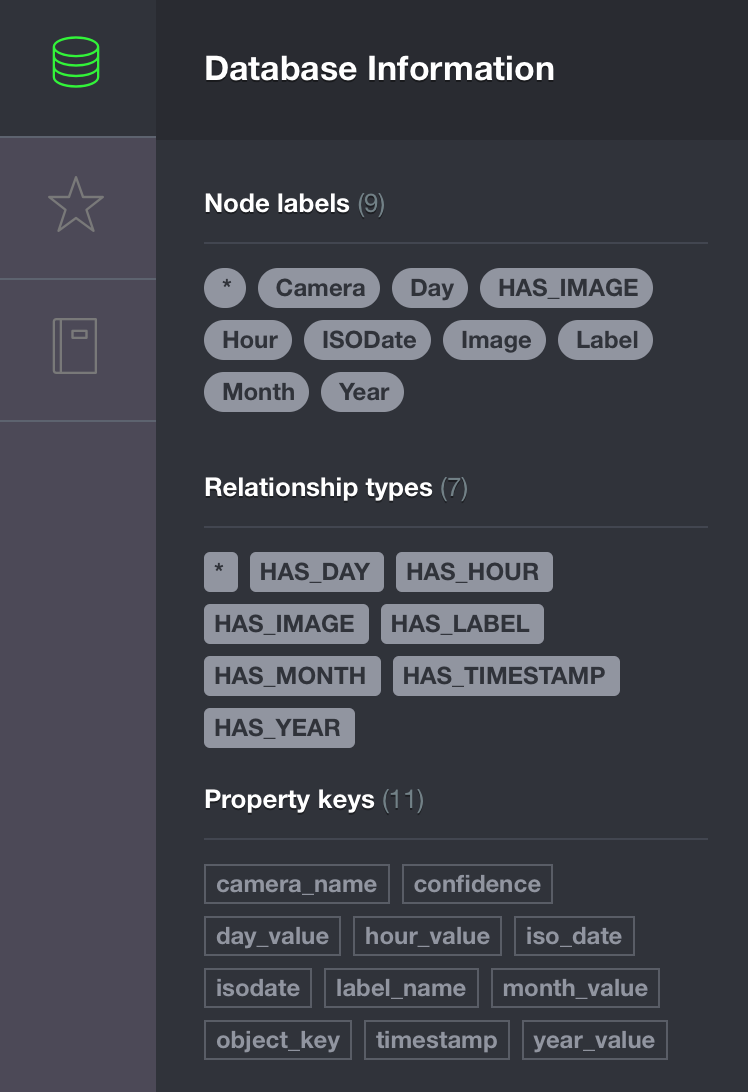

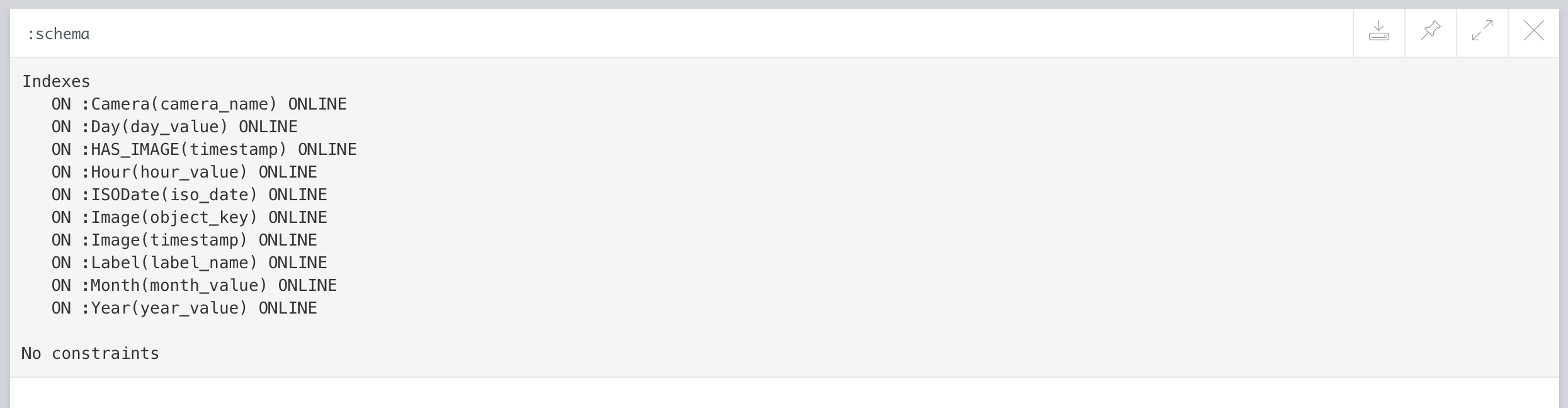

The graph is configured as follows – Labels, Relationship Types and Property Keys:

NOTE: Ignore the HAS_IMAGE label – that is there by mistake.

The following indexes are also created:

NOTE: Create the indexes before you import the table to the graph – it increases the performance of the MERGE statements dramatically.

Currently my graph holds 1489 unique image label nodes, 214k image nodes and 9 camera nodes. Yes, this is a dense node graph.

Initial Analysis

Since our goal was to find the images with a person in them, to limit the set for review in any time period, I’ll (for now) discuss only the accuracy of Rekognition in locating people.

First off, I noticed immediately that as the confidence of the label drops below 90% the accuracy drops dramatically. The following analysis only considers the accuracy of labels with confidence greater than 90%.
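Applying the 90% cut-off to a single `detect_labels` response is short; a sketch follows, and the helper name is hypothetical. Alternatively, `detect_labels` accepts a `MinConfidence` argument, which pushes the filter into the API call itself. The cost is that low-confidence labels are never stored for later analysis like the one below.

```python
def has_person(response, min_confidence=90.0):
    """True if the detect_labels response contains a 'Person' label
    with confidence strictly above min_confidence (a percentage)."""
    return any(
        label['Name'] == 'Person' and label['Confidence'] > min_confidence
        for label in response.get('Labels', [])
    )
```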

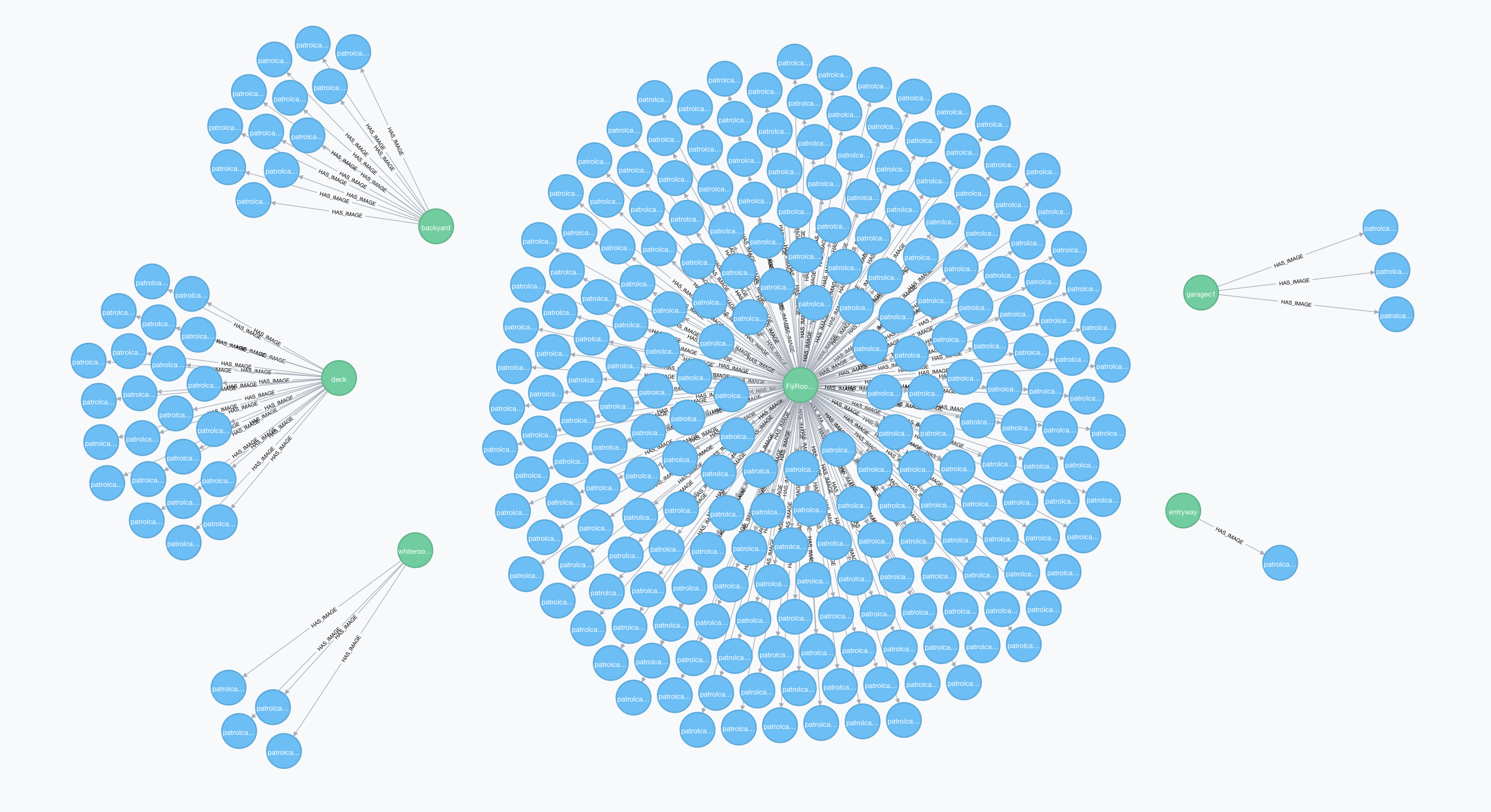

This is (essentially) a heat map, by camera, of the images labeled Person in the last 2 hours:

This was generated using the following cypher query:

```cypher
MATCH (person_label:Label {label_name: 'Person'})-[label_edge:HAS_LABEL]-(image:Image)-[:HAS_IMAGE]-(camera:Camera)
WHERE label_edge.confidence > 90
  AND image.timestamp > ((timestamp() / 1000) - 60*60*2)
RETURN camera, image
```

Using the results from this query (specifically the S3 Object pointer on the Image Nodes) we can sample some of these images and see how accurate the labeling is.

Here is a good positive result – that is me in the garage. Excellent. As a matter of fact, the false positive rate for people is quite good (again, only when considering confidence values over 90%).


What became immediately clear, however, was the rate of false negatives. Consider the image below:

There is clearly a person in this image. As a matter of fact, this is exactly the kind of image I’m interested in: a person near the door. However, a quick inspection of the labels shows that none of them is more than 90% confident, and most of them are… odd. The key point is that there are hundreds of these false negatives. When I look at images that Rekognition says have a person in them, they do; but many images that do have a person in them never get the label.

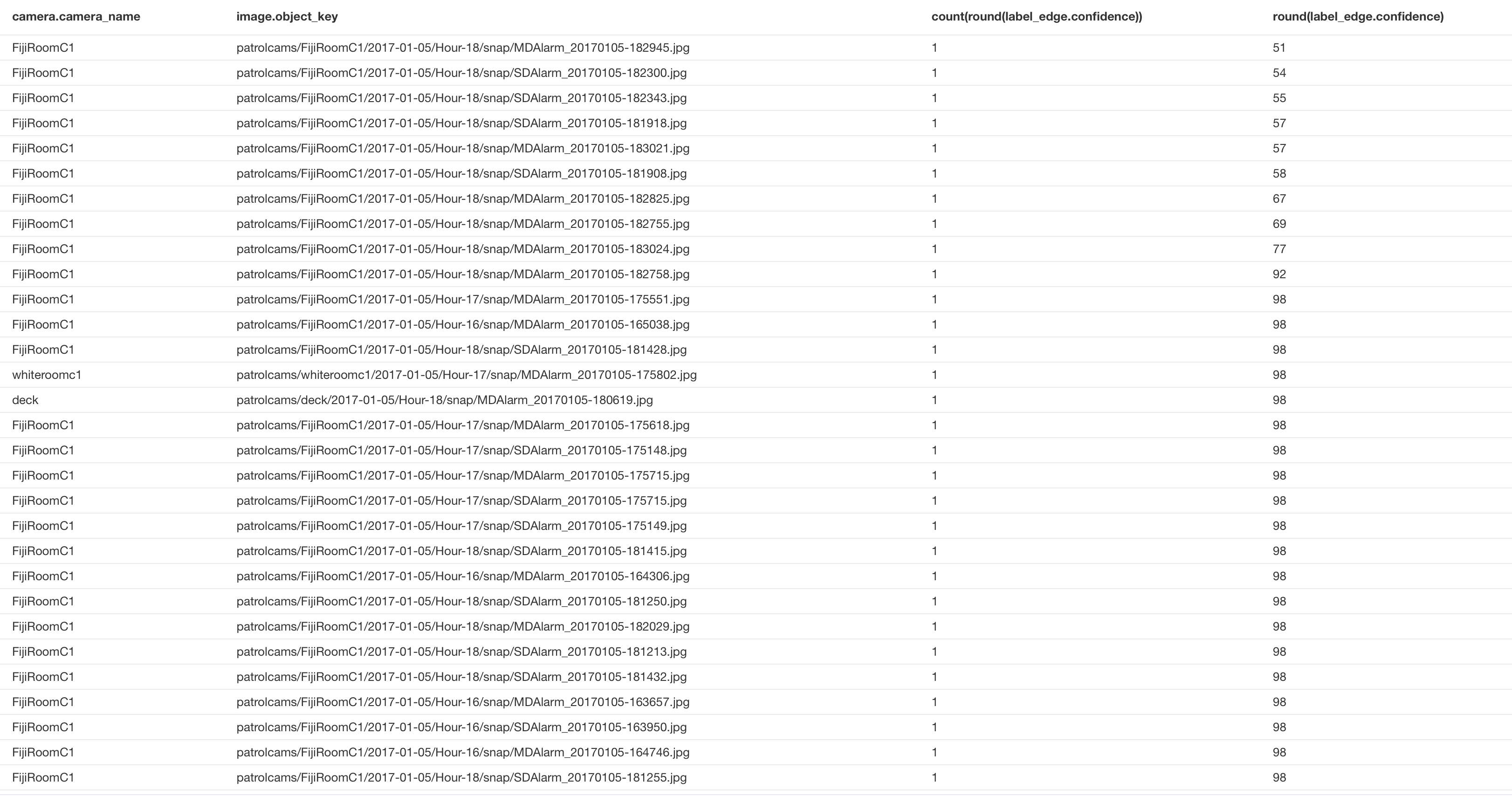

This is borne out by the data:

This data was generated using the following cypher query:

```cypher
MATCH (person_label:Label {label_name: 'Person'})-[label_edge:HAS_LABEL]-(image:Image)-[:HAS_IMAGE]-(camera:Camera)
WHERE image.timestamp > ((timestamp() / 1000) - 60*60*2)
RETURN camera.camera_name, image.object_key,
       count(round(label_edge.confidence)), round(label_edge.confidence)
ORDER BY round(label_edge.confidence) ASC
```

There are very few images (only 9 of the 280 in the last 2 hours) with a Person confidence under 90%. In fact, only 10 of the 280 have confidence under 98%. In other words, Rekognition is either very, very confident it has found a person or not confident at all, which results in many, many false negatives.
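The same distribution check can be run on any list of Person confidences pulled from the graph or from DynamoDB. The sketch below mirrors the query’s `round(...)` grouping and counts how many values fall below each cut-off. The input is whatever your own query returns, and the helper name is hypothetical.

```python
from collections import Counter


def confidence_profile(confidences, cutoffs=(90, 98)):
    """Histogram of rounded confidences plus counts below each cut-off.

    Python's round() rounds exact .5 values to the nearest even integer,
    so those values may land in a different bucket than Cypher's round().
    """
    histogram = Counter(round(c) for c in confidences)
    below = {cut: sum(1 for c in confidences if c < cut) for cut in cutoffs}
    return histogram, below
```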

Given that, I’ve concluded that Rekognition isn’t suitable for my use case (and, I assume, the use case many people will have with security camera images). That being said, I’m sure the AWS team will jump on this. Using my data and others’ as training data, they should be able to greatly improve the person detection offered by Rekognition.
